
Speed Up Your Indexing: Mastering Crawl Budget and Troubleshooting Roadblocks




Imagine your website as a hidden gem, brimming with valuable content, yet undiscovered by search engines. Frustrating, right? The key to unlocking its full potential lies in understanding and optimizing search engine indexing. This is crucial for boosting your website’s visibility and driving organic traffic.

Getting your pages indexed efficiently is the first step toward ranking at all. How well search engines find and understand your content depends on two things. The first is technical: whether crawlers can discover and fetch your pages. The second is content quality: whether those pages are worth indexing once they have been fetched. The sections below cover both.

Understanding the Crawl Budget

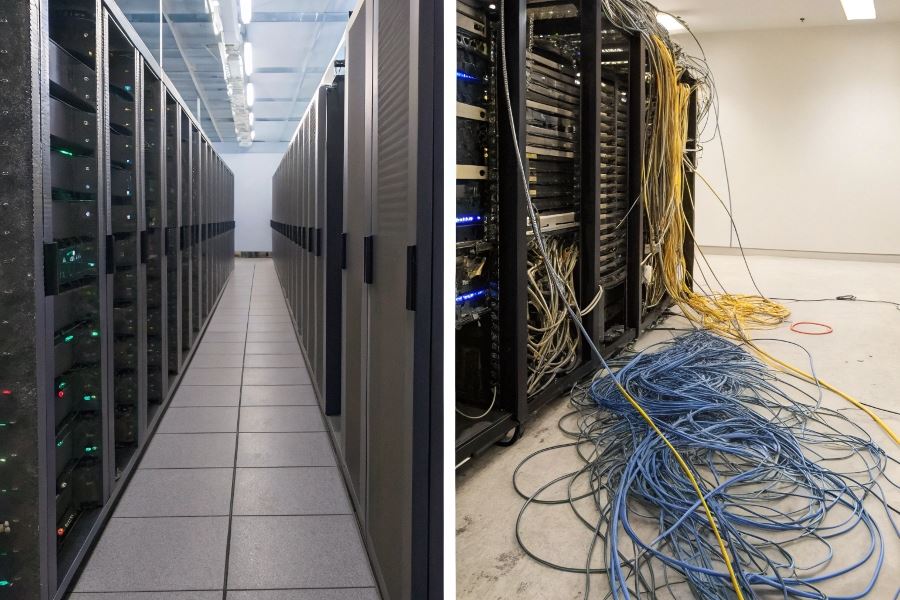

Search engines like Google allocate a limited amount of crawling to each site. This is known as the crawl budget: roughly, the number of URLs a search engine bot (crawler) can and wants to crawl on your site within a given timeframe. Google notes that this is mainly a concern for large or frequently updated sites, and smaller sites generally don't need to worry about it. On a large site, though, a poor structure can quickly exhaust the budget and leave many pages unindexed. For example, a site with thousands of thin, low-quality pages, such as near-duplicate tag or filter pages, can waste the crawler's time on irrelevant content before it reaches your most important pages. Conversely, a well-structured site with clear internal linking guides the crawler efficiently and makes the most of your crawl budget.
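
One way to see where your crawl budget actually goes is to count Googlebot requests in your server access logs. The Python sketch below assumes logs in the common Apache/Nginx "combined" format and groups hits by the first path segment. The sample log lines and paths are made up for illustration. The sketch matches on the user-agent string alone, which can be spoofed, so a real audit should also verify Googlebot with a reverse DNS lookup.

```python
import re
from collections import Counter

# Assumed Apache/Nginx "combined" log format:
# IP ident user [time] "METHOD PATH PROTOCOL" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "\S+ (?P<path>\S+)[^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits_by_section(lines):
    """Count Googlebot requests per top-level path segment, e.g. /blog or /tag."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # unparseable line or a non-Googlebot visitor
        path = match.group("path").split("?", 1)[0]  # ignore query strings
        segments = [part for part in path.split("/") if part]
        hits["/" + segments[0] if segments else "/"] += 1
    return hits


# Hypothetical log excerpt: two tag-archive hits, one article hit, one human visitor.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'66.249.66.1 - - [09/Jul/2025:10:00:00 +0000] "GET /tag/red?page=3 HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [09/Jul/2025:10:00:05 +0000] "GET /tag/blue HTTP/1.1" 200 498 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [09/Jul/2025:10:00:09 +0000] "GET /blog/crawl-budget HTTP/1.1" 200 2048 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [09/Jul/2025:10:01:00 +0000] "GET /blog/crawl-budget HTTP/1.1" 200 2048 "-" "Mozilla/5.0"',
]

print(googlebot_hits_by_section(SAMPLE_LOG).most_common())  # [('/tag', 2), ('/blog', 1)]
```

In this sample, most of Googlebot's requests went to /tag archive pages, which are exactly the kind of thin pages described above. On a real server you would pass the open log file instead of the list.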

Architecting for Optimal Crawlability

Website architecture plays a pivotal role in indexing efficiency. A logical site structure with clear internal linking helps search engine bots navigate your website effortlessly. Think of it as a roadmap for the crawlers. For instance, a hierarchical structure with clear category and sub-category pages makes it easy for crawlers to understand how different pages relate to one another. Avoid needlessly complex URLs, such as long strings of filter or session parameters that create many URLs for the same content. Also avoid broken links and redirect chains. All of these hinder crawlability and waste crawl budget. Review your sitemap and robots.txt file regularly to make sure search engine crawlers can reach the pages you care about.
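
A quick way to check whether your hierarchy is as shallow as you think is to measure click depth: the minimum number of clicks from the homepage to each page. The sketch below runs a breadth-first search over a hypothetical internal-link map. In practice you would build that map from a site crawl. The three-click threshold is a common rule of thumb, not a documented search-engine limit.

```python
from collections import deque


def click_depths(links, start="/"):
    """Breadth-first search from `start`; returns {url: minimum clicks to reach it}."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # BFS reaches each page first by a shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: homepage -> categories -> sub-categories -> products -> reviews.
SITE_LINKS = {
    "/": ["/shoes/", "/apparel/"],
    "/shoes/": ["/shoes/running/", "/shoes/trail/"],
    "/shoes/running/": ["/shoes/running/model-a"],
    "/shoes/running/model-a": ["/shoes/running/model-a/reviews"],
    "/apparel/": ["/apparel/jackets/"],
}

depths = click_depths(SITE_LINKS)
too_deep = [url for url, depth in depths.items() if depth > 3]  # rule of thumb: <= 3 clicks
print(too_deep)
```

Pages that never appear in `depths` cannot be reached through internal links at all. They need links from relevant category pages.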

Unlocking Search Visibility

Ever feel like your website is shouting into the void, despite your best content creation efforts? The problem might not be your content itself, but how effectively search engines can find and understand it. Improving your site's discoverability starts with understanding how search engine crawlers navigate your website. From there, a few targeted technical SEO fixes can make a noticeable difference to how efficiently your pages get indexed.

Let’s dive into three critical technical SEO factors that directly impact how quickly and thoroughly search engines index your content. These factors, when optimized, can significantly boost your organic traffic.

XML Sitemaps: A Roadmap for Crawlers

XML sitemaps act as a roadmap for search engine crawlers, listing the pages you want them to find. A well-structured sitemap lists your canonical, indexable URLs. It should leave out redirects, error pages, and pages that are blocked by robots.txt or marked noindex. Each entry can also carry its last modification date. Google uses the lastmod value when it is consistently accurate, which helps it decide which pages to recrawl. Google ignores the priority and changefreq fields, so a sitemap cannot force particular pages to be crawled first. Submitting your XML sitemap to Google Search Console https://dzen.ru/psichoz/ and Bing Webmaster Tools https://www.bing.com/webmasters/ is the most direct way to hand it over. Think of it as sending a direct invitation to the search engine bots: "Come on in, these are the pages we want you to see!" Keep the sitemap up to date, especially after significant site changes or new content additions. Otherwise, new pages may go unnoticed until crawlers stumble on them through links. A single sitemap file is limited to 50,000 URLs and 50 MB uncompressed. Larger sites split their URLs across several sitemaps listed in a sitemap index file.
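
Sitemaps are usually generated by your CMS or an SEO plugin, but the format is simple enough to produce yourself. The Python sketch below uses only the standard library and writes loc and lastmod for each URL. It deliberately leaves out priority and changefreq, since Google ignores them. The URLs and dates are placeholders. ElementTree escapes characters such as & inside URLs, which the sitemap format requires.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file limit in the sitemaps.org protocol


def build_sitemap(pages):
    """pages: (absolute_url, last_modified_date) pairs for canonical, indexable URLs."""
    pages = list(pages)
    if len(pages) > MAX_URLS:
        raise ValueError("too many URLs: split them across files and use a sitemap index")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes & and < on output
        # W3C date format (YYYY-MM-DD); change it only when the content really changes.
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()
    ET.indent(urlset)  # pretty-print for readability (Python 3.9+)
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


sitemap_xml = build_sitemap([
    ("https://www.example.com/", date(2025, 7, 1)),
    ("https://www.example.com/blog/crawl-budget", date(2025, 7, 9)),
])
print(sitemap_xml)
```

Besides submitting the file in Search Console and Bing Webmaster Tools, you can reference it from robots.txt with a line such as Sitemap: https://www.example.com/sitemap.xml.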

Robots.txt: Controlling Crawler Access

The robots.txt file acts as a gatekeeper, controlling which parts of your website search engine crawlers may access. It must sit at the root of the host, for example https://www.example.com/robots.txt. Crawlers only look for it there, so a copy placed anywhere else is ignored. While it seems simple, a misconfigured file can severely hinder indexing by blocking crucial pages and keeping them out of search results. For example, a single "Disallow: /" line under "User-agent: *" blocks your entire site. Always check your robots.txt file with a tool such as the robots.txt report in Google Search Console https://dzen.ru/psichoz/robots-test, which replaced the older robots.txt Tester, to confirm it works as intended. Keep in mind that robots.txt controls crawling, not indexing. A blocked URL can still be indexed, without its content, if other pages link to it. To keep a page out of search results, use a noindex directive instead, and leave the page crawlable so crawlers can see that directive. The goal isn't to block everything. It's to steer crawlers toward your most valuable content and away from low-value areas such as internal search results or admin pages.
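
You can sanity-check rules before deploying them with Python's standard-library urllib.robotparser. The sketch below compares an intended file with the single misplaced line the paragraph above warns about. The paths and the example.com host are placeholders. Python's parser applies rules in file order and does not understand wildcard patterns such as /*.pdf$, whereas Google picks the most specific matching rule. Treat this as a quick check rather than an exact model of Googlebot.

```python
from urllib.robotparser import RobotFileParser


def build_parser(robots_txt):
    """Parse robots.txt rules from a string instead of fetching them over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


INTENDED = """\
User-agent: *
Disallow: /admin/
Disallow: /cart
"""

# The misplaced line: "Disallow: /" blocks everything ("Disallow:" with no path blocks nothing).
MISTAKE = """\
User-agent: *
Disallow: /
"""

for name, rules in (("intended", INTENDED), ("mistake", MISTAKE)):
    parser = build_parser(rules)
    for path in ("/blog/crawl-budget", "/admin/settings"):
        allowed = parser.can_fetch("Googlebot", "https://www.example.com" + path)
        print(f"{name:<9} {path:<20} {'allowed' if allowed else 'BLOCKED'}")
```

With the intended rules, blog posts stay crawlable while /admin/ is blocked. With the mistake, every URL on the site is blocked.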

Crawl Errors and 404s: Fixing Broken Links

Broken links and 404 errors are like potholes on your website's digital highway. Links that lead to missing pages send crawlers to dead ends, which wastes crawl budget and frustrates users. Regularly monitor your website for crawl errors in Google Search Console, and fix the issues promptly to keep crawling smooth and efficient. Note that 404s are not a ranking penalty in themselves. Google states that 404 errors generally won't affect your site's search performance, and returning a 404 for a page that genuinely no longer exists is the correct behavior. The real cost is lost visitors, plus lost link equity when valuable or widely linked pages disappear. When a page has moved or has a close replacement, set up a 301 (permanent) redirect to the most relevant new URL. Then update your internal links to point directly at the final destination. Avoid redirecting every missing page to the homepage, because Google tends to treat that as a soft 404. Done this way, redirects keep the user experience seamless and preserve the link equity the old URL had earned.
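
Redirects added over several site migrations tend to pile up into chains, where A redirects to B and B redirects to C. Each extra hop costs another crawl request. The Python sketch below takes a hypothetical map of old URLs to new URLs, such as one exported from your server configuration. It points every old URL straight at its final destination and reports loops, which never resolve. The URLs are made up for illustration.

```python
def flatten_redirects(redirects):
    """Point every redirecting URL directly at its final destination.

    redirects: {old_url: new_url}. Raises ValueError on a redirect loop.
    """
    flattened = {}
    for start in redirects:
        path = [start]
        target = redirects[start]
        while target in redirects:  # the target itself redirects: follow the chain
            if target in path:
                raise ValueError("redirect loop: " + " -> ".join(path + [target]))
            path.append(target)
            target = redirects[target]
        flattened[start] = target
    return flattened


# Hypothetical redirect map accumulated over two site migrations.
REDIRECTS = {
    "/2019/running-shoes": "/blog/running-shoes",
    "/blog/running-shoes": "/guides/running-shoes",
    "/old-contact": "/contact",
}

for old, final in flatten_redirects(REDIRECTS).items():
    kind = "was a chain" if REDIRECTS[old] != final else "already direct"
    print(f"{old} -> {final} ({kind})")
```

After flattening, update internal links to use the final URLs as well, so crawlers and users skip the redirect entirely.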

By meticulously addressing these three technical SEO factors, you'll significantly improve your website's indexing efficiency, leading to better search engine visibility and, ultimately, more organic traffic. Remember, consistent monitoring and optimization are key to long-term success.

Content, Internal Links, and Monitoring

Ever feel like your amazing content is lost in the digital wilderness? You've poured your heart and soul into crafting compelling pieces, yet your organic search performance remains stubbornly stagnant. Technical fixes are only part of the answer. The content itself, the way your pages link to one another, and how closely you monitor indexing all affect how efficiently search engines index your site.

Creating truly exceptional content is only half the battle. Search engines first have to crawl and index your pages before they can judge their relevance, and even then they decide whether each page is worth keeping in the index. That is why quality matters for indexing, not just for ranking. Focus on high-quality, relevant, and unique content that genuinely satisfies user search intent. Think about it: a thin, keyword-stuffed page offers little value to a user, and search engines recognize this. Instead, aim for comprehensive, insightful pieces that naturally incorporate your target keywords. For example, a blog post about "best running shoes" should go beyond a simple product list. It should delve into different running styles, foot types, and training advice, using relevant keywords naturally throughout the text. This holistic approach signals to search engines that your content is authoritative and valuable.

Internal Linking Mastery

Internal linking is often overlooked, but it’s a powerful tool for boosting indexability. Think of your website as a city, with each page representing a building. Internal links act as roads, guiding search engine crawlers through your site and connecting related content. Strategic internal linking helps search engines understand the hierarchy and relationships between your pages, improving overall site navigation and crawlability. For instance, a blog post about "beginner yoga poses" could link to articles on "yoga mats for beginners" or "yoga for flexibility," creating a natural flow and enhancing the user experience. Don’t just link randomly; ensure the links are contextually relevant and add value to the user’s journey.
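
A page that no other page links to (an orphan page) is hard for crawlers to discover, even if it appears in your sitemap. The sketch below compares a list of sitemap URLs with a link map gathered from a site crawl and reports the orphans. The data structures, the yoga URLs, and the homepage exclusion are assumptions made for illustration.

```python
def find_orphans(sitemap_urls, internal_links, homepage="/"):
    """Return sitemap URLs that no other page links to.

    sitemap_urls: URLs you want indexed (for example, read from your XML sitemap).
    internal_links: {source_url: [target_url, ...]} collected by crawling the site.
    The homepage is excluded because it is the entry point and needs no inbound link.
    """
    linked_to = set()
    for source, targets in internal_links.items():
        linked_to.update(t for t in targets if t != source)  # self-links don't help discovery
    return sorted(set(sitemap_urls) - linked_to - {homepage})


# Hypothetical sitemap and crawl data.
SITEMAP_URLS = [
    "/",
    "/yoga/beginner-poses",
    "/yoga/mats-for-beginners",
    "/yoga/flexibility",
    "/yoga/breathing-basics",
]
INTERNAL_LINKS = {
    "/": ["/yoga/beginner-poses"],
    "/yoga/beginner-poses": ["/yoga/mats-for-beginners", "/yoga/flexibility"],
    "/yoga/flexibility": ["/yoga/beginner-poses", "/yoga/flexibility"],
}

print(find_orphans(SITEMAP_URLS, INTERNAL_LINKS))  # ['/yoga/breathing-basics']
```

The fix for an orphan is a contextual link from a related page. In this example, the beginner poses article could link to the breathing basics page.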

Google Search Console Insights

Monitoring your indexing performance is essential. Google Search Console https://dzen.ru/psichoz/about provides invaluable data on how Google sees your website. Regularly check the Page indexing report (formerly called the Index Coverage report) to identify indexing issues such as crawl errors or URLs blocked by robots.txt. Analyzing this data lets you address problems proactively and keep your content accessible to search engines. For example, if you notice a significant number of "Server error (5xx)" entries, investigate your server's configuration, capacity, and error logs. By consistently monitoring and analyzing your indexing performance, you can fine-tune your SEO strategy and maximize your organic search visibility. This iterative process of optimization is key to long-term success.
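
When a report lists hundreds of affected URLs, grouping them by site section often reveals the cause faster than reading them one by one. For example, 5xx errors may turn out to be concentrated under a single directory. The sketch below assumes you have saved the affected URLs and their issue labels as a CSV file with columns named URL and Reason. That layout is hypothetical, so adjust the column names to match whatever your export actually contains.

```python
import csv
import io
from collections import Counter, defaultdict
from urllib.parse import urlparse


def issues_by_section(rows):
    """Group indexing issues by reason, then by top-level path segment."""
    report = defaultdict(Counter)
    for row in rows:
        segments = [part for part in urlparse(row["URL"]).path.split("/") if part]
        section = "/" + segments[0] if segments else "/"
        report[row["Reason"]][section] += 1
    return report


# Hypothetical export; the column names are assumptions, not a documented format.
SAMPLE_CSV = """\
URL,Reason
https://www.example.com/shop/item-1,Server error (5xx)
https://www.example.com/shop/item-2,Server error (5xx)
https://www.example.com/shop/item-3,Server error (5xx)
https://www.example.com/blog/old-post,Not found (404)
"""

report = issues_by_section(csv.DictReader(io.StringIO(SAMPLE_CSV)))
for reason, sections in report.items():
    print(reason, dict(sections))
```

For a real file, open it with `open(path, newline="", encoding="utf-8")` and pass the handle to `csv.DictReader` in place of the `StringIO` sample.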
